Geolocation via Pipelines in Graylog

To the delight of most Graylog users, geolocation is automatically built into the platform via the "GeoIP Resolver" plugin. All that is needed is a MaxMind database and you are ready to roll. However, there is a better way of going about geolocation that might be worth implementing if you are a Graylog power user: lookup tables & pipelines.

But there's a plugin...

You may wonder why it is worth building out a lookup table and pipeline rules when there is essentially an "easy button" right on the configuration page. If you are a heavy Graylog user, then there are a few major advantages to performing geolocation via pipelines instead of the plugin.

1. Geolocation Fields

When the GeoIP Resolver runs, it creates several new fields based on the MaxMind database. These new fields contain the coordinates of the IP, the country code, and the city. Though these are the key pieces of information you are likely interested in, there is much more data in the MaxMind database that can be accessed and might be useful to you.

    "single_value": "52.3529,4.9415",
    "multi_value": {
      "city": {
        "confidence": null,
        "geoname_id": 2759794,
        "names": {
          "de": "Amsterdam",
          "ru": "Амстердам",
          "pt-BR": "Amesterdão",
          "ja": "アムステルダム",
          "en": "Amsterdam",
          "fr": "Amsterdam",
          "zh-CN": "阿姆斯特丹",
          "es": "Ámsterdam"
        }
      },
      [...]
      "country": {
        "confidence": null,
        "geoname_id": 2750405,
        "iso_code": "NL",
        "names": {
          "de": "Niederlande",
          "ru": "Нидерланды",
          "pt-BR": "Holanda",
          "ja": "オランダ王国",
          "en": "Netherlands",
          "fr": "Pays-Bas",
          "zh-CN": "荷兰",
          "es": "Holanda"
        }
      },
      "location": {
        "accuracy_radius": 100,
        "average_income": null,
        "latitude": 52.3529,
        "longitude": 4.9415,
        "metro_code": null,
        "population_density": null,
        "time_zone": "Europe/Amsterdam"
      },
      "postal": {
        "code": "1098",
        "confidence": null
      [...]

The GeoIP Resolver decides which fields to assign, but with a pipeline you can extract any piece of the returned data, including the time zone, the postal code, or the name of the country in a different language.
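As a rough sketch of what that can look like, the rule below pulls three of those extra values out of the lookup result. It assumes a lookup table named geoip-lookup and an IP stored in src_ip (both are set up later in this post). The rule name and the target field names are made up for illustration, and the accessors simply mirror the keys shown in the snippet above.

```
rule "src_ip geoip extras (illustrative)"
when
    has_field("src_ip")
then
    // Assumption: "geoip-lookup" is a lookup table backed by a MaxMind City database
    let geo = lookup("geoip-lookup", to_string($message.src_ip));
    // Hypothetical target field names; rename them to fit your own schema
    set_field("src_ip_geo_time_zone", geo["location"].time_zone);
    set_field("src_ip_geo_postal_code", geo["postal"].code);
    set_field("src_ip_geo_country_name_de", geo["country"].names.de);
end
```

Any other key in the multi_value object should be reachable the same way, so treat this as a template rather than a fixed list.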

2. Order of Processing

Depending on your configuration, the GeoIP Resolver plugin runs either before or after pipeline processing. If you perform normalization in your pipelines, there is a good chance it has been configured to run after pipeline processing so that the geolocation fields are based on the normalized fields.

If that is the case, then all pipeline processing happens before any geolocation information is attached to the message. If geolocation is instead done by a pipeline rule right after normalization, the new fields become available to every later pipeline rule. The ways this can be used are endless. For example, you could create a pipeline rule that labels inbound traffic logs from the firewall as "foreign" when their country code is not US (or whatever your country code is). Or, if you want to route messages for inbound traffic from specific countries to a dedicated stream, you can do that. None of this is possible when geolocation occurs after pipeline processing.
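Here is a minimal sketch of the "foreign" example. It assumes an earlier stage has already written src_ip_geo_country_code (the field name used by the rules later in this post). The rule name, the traffic_origin field, and the "Foreign inbound traffic" stream are hypothetical, and the stream must already exist for the routing call to have any effect.

```
rule "tag and route foreign inbound traffic (illustrative)"
when
    // Only act on messages that were geolocated in an earlier stage
    has_field("src_ip_geo_country_code") &&
    to_string($message.src_ip_geo_country_code) != "US"
then
    // Hypothetical field name used to label the message
    set_field("traffic_origin", "foreign");
    // Hypothetical stream name; create the stream before enabling the rule
    route_to_stream(name: "Foreign inbound traffic");
end
```

Place a rule like this in a later stage than the geolocation rule, otherwise the country code field will not exist yet when the condition is evaluated.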

3. Faster processing

From a processing & resource perspective, a pipeline leveraging a lookup table is going to be much faster and more efficient than the plugin. The plugin is designed to look for potential IPs using a regular expression. Essentially, if a value fits the pattern *.*.*.* then it will be run through the plugin. In order to do this, the plugin has to read the entire contents of every field in every message to be sure it has not missed an IP. In pipeline rules, however, you can use conditional statements to determine which messages get processed. For each message that passes the conditional check, you then specify exactly which fields contain IP addresses and process only those fields for geolocation, rather than pattern-matching every field of every message.

Preparing the Lookup Table

This section is a brief overview of getting the geolocation lookup table ready for use. It does not go into detail about what lookup tables, caches, and data adapters are, so visit the Graylog documentation if you need more information on those.

1. Upload the MaxMind Database

Just as with the plugin, a MaxMind database must first be stored on your Graylog server. This is the same step described in the first two paragraphs of the "Configure the database" section of the Geolocation article in the Graylog documentation.

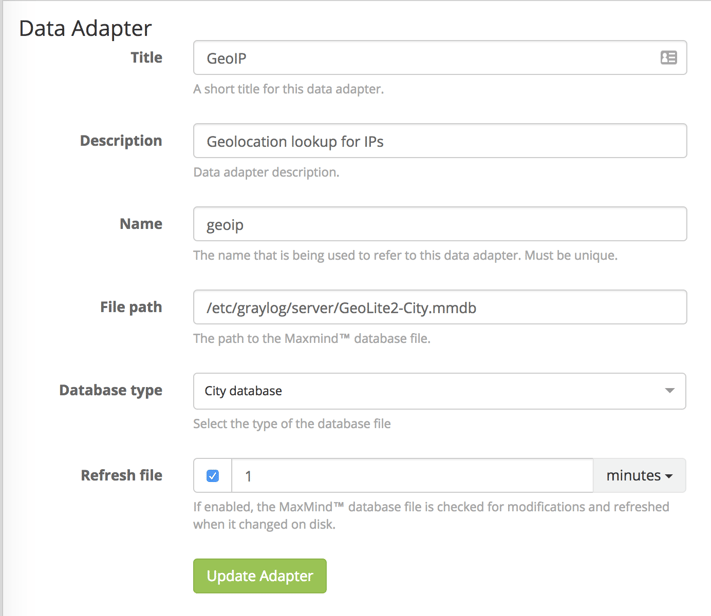

2. Create the Data Adapter

Lucky for us, because of the support for the GeoIP Resolver, Graylog has a built-in Data Adapter Type for MaxMind databases. Set the File path to wherever you put the mmdb file in step 1. Unless you have no interest in city & postal code information, leave the Database type set to "City database". The rest of the fields should be self-explanatory, and you should end up with something like the below screenshot. Select "Create Adapter" to save the data adapter.

3. Create the Cache

A cache defines how long a key/value pair should be “remembered” before another lookup needs to be performed. The purpose of this is to avoid spending computing resources on repeatedly looking up frequent values. Caches store lookup results for a defined amount of time so that fewer lookups hit the data adapter, as the data can be pulled immediately from memory.

There are no special settings for geolocation for the cache, so you can refer to the lookup table documentation for how to configure this.

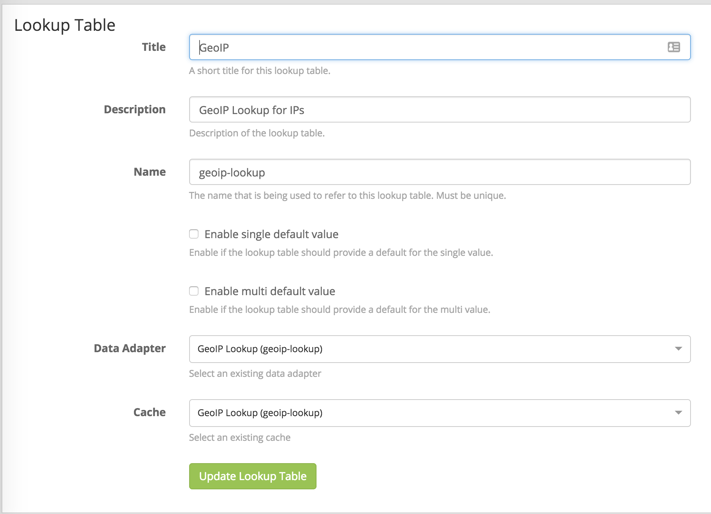

4. Create the Lookup Table

To create the lookup table itself, navigate to System > Lookup Tables and select “Create Lookup Table”. Fill out the name and description fields. Set the Data Adapter and Cache fields to those you created in steps 2 & 3, respectively. With everything filled out, the settings should align with the below screenshot. Select "Create Lookup Table" to save the lookup table and it is ready to be used in pipelines.

Creating the pipeline rules

While there are many ways to approach using the lookup table in a pipeline rule, the following section shows the method of creating rules for our two normalized IP fields and looking up the information we are interested in.

Note: This should be customized to match the field names you use in your own environment.

At this point we assume that we have normalized our fields to src_ip and dst_ip. These rules will also reference src_ip_is_internal and dst_ip_is_internal which are fields I have created in a separate pipeline that identifies RFC 1918 addresses.
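For context, here is one way the is_internal field could be produced. This is a sketch and not the exact rules from my environment. It assumes src_ip holds an IPv4 address, covers only the three RFC 1918 ranges, and uses made-up rule names. It would need to run in a stage before the geolocation rules.

```
rule "flag internal src_ip (illustrative)"
when
    has_field("src_ip") && (
        cidr_match("10.0.0.0/8", to_ip($message.src_ip)) ||
        cidr_match("172.16.0.0/12", to_ip($message.src_ip)) ||
        cidr_match("192.168.0.0/16", to_ip($message.src_ip))
    )
then
    set_field("src_ip_is_internal", true);
end

rule "flag external src_ip (illustrative)"
when
    has_field("src_ip") && !(
        cidr_match("10.0.0.0/8", to_ip($message.src_ip)) ||
        cidr_match("172.16.0.0/12", to_ip($message.src_ip)) ||
        cidr_match("192.168.0.0/16", to_ip($message.src_ip))
    )
then
    set_field("src_ip_is_internal", false);
end
```

The same pair of rules can be duplicated for dst_ip to produce dst_ip_is_internal.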

First, we build out the "when" statement (our conditions). In this first rule, we check whether the message has a src_ip_is_internal field and whether that field is false. We are not interested in locating RFC 1918 addresses, so we exclude all "is_internal" addresses.

    when
        has_field("src_ip_is_internal") &&
        $message.src_ip_is_internal == false

Now that we have a condition filtering our messages down to only those that are relevant, we can perform our lookups.

The first line is a let statement, which sets a variable "geo" to the result of the lookup function. The lookup function takes the name of the lookup table (geoip-lookup) and then the key to look up, in this case the string representation of the src_ip field.

    then
        let geo = lookup("geoip-lookup", to_string($message.src_ip));

With the geo variable defined, we can interact with the values in the "multi_value" JSON object (shown in the first snippet of this post). Using the set_field function, we can create our geolocation fields and map them to specific items from the lookup result. Below are some examples of the formatting and the types of information that can be obtained.

        set_field("src_ip_geolocation", geo["coordinates"]);
        set_field("src_ip_geo_country_code", geo["country"].iso_code);
        set_field("src_ip_geo_country_name", geo["country"].names.en);
        set_field("src_ip_geo_city_name", geo["city"].names.en);
    end

Put those sections together and you have a pipeline rule that will perform a geolookup and assign the values you wish to know to their own fields.

Here is an example of the entire rule, this time applied to the dst_ip field.

    rule "dst_ip geoip lookup"
    when
        has_field("dst_ip_is_internal") && $message.dst_ip_is_internal == false
    then
        let geo = lookup("geoip-lookup", to_string($message.dst_ip));
        set_field("dst_ip_geolocation", geo["coordinates"]);
        set_field("dst_ip_geo_country_code", geo["country"].iso_code);
        set_field("dst_ip_geo_country_name", geo["country"].names.en);
        set_field("dst_ip_geo_city_name", geo["city"].names.en);
    end

These rules can of course be customized to run against any IP-related fields and to extract any information contained in the MaxMind database, but the above is a simple starting point.

Closing Comments

While the steps included here may seem like a lot more work than checking "enabled" on the built-in plugin, the flexibility and efficiency provided by this method make it worth it.

Special thanks to Lennart Koopmann for taking the time to introduce me to multi-value lookups in pipelines. And of course thanks to Graylog for providing the community with such a powerful tool.

If you come up with any creative uses for geolocation fields in later pipeline stages, I'd love to hear about them. You can reach out to me via Twitter (@megan_roddie).