The Infrastructure, II

After DEF CON last year, we posted a blog about our infrastructure, which was spread between a handful of Intel NUCs and AWS. It was epic. It was shiny and new. We loved it.

And then we burned it all down. We wiped and repurposed the NUCs and killed all the things in AWS.

Our team scouted out our new goods, bought the hardware, slapped some wheels on it, and started anew.

Several of you have been asking for the deets. We've been holding off on writing this until the con dust settled. So here we go.

One of the biggest changes this year...

Was that we weren't just running OpenSOC at DEF CON.

We also ran NDR Crucible at Black Hat, with a day of "rest" in between that and running OpenSOC at the Blue Team Village.

We needed to scale even more, we needed student systems, we needed more variety, more scenarios, more surprises, more everything.

Including MOAR RAM! <3

Anyone who's ever relied on any cloud hosting at scale knows what their bank account looks like after months of dev-ing and testing (which is OpenSOC 99% of the year), or even just a month of prod-ing.

OpenSOC is no small environment. This year, we had somewhere around 50-60 Windows systems and roughly 70 Linux systems.

Running on our own hardware gave us PLENTY of horsepower to do all of the above without the monthly overhead. And total control.

One of the biggest milestones this year...

Was fully automated Windows and DC builds.

After last year, I made it my goal:

> At some point in the near future, we plan on automating a lot of our Windows builds as well. One thing at a time!

And you guys, I felt like I pulled some magic out of a hat (I am not primarily a Windows nerd, far from it).

~40 of our Windows systems were completely rebuilt nightly between training days at Black Hat and OpenSOC.

This meant Windows, Office, all of our random warez that we scattered throughout the environment, scenario artifacts, Graylog/osquery/OSSEC/Sysmon agents, traffic generation, all the things. So fresh and so clean. Every. Day.

One of the biggest hurdles...

Was the DEF CON and/or Blue Team Village network. Or a combination of both. We're not 100% sure where our problems started.

We got set up on Thursday and had everything humming, and systems were testing out perfectly when I got up Friday morning. I health-checked all the things and walked to the village. And everything came to a screeching halt.

Intermittently, things just died. We couldn't ping 8.8.8.8. We couldn't ping 1.1.1.1. We couldn't get outbound at all for a while. Apparently, we were being blocked and/or throttled to some degree. At one point we couldn't reach anything in AWS. We switched between a couple of different VPNs, and even switched to cellular for a while. TL;DR: the DEF CON network had its share of issues that morning and afternoon, and as a result, so did everyone else.
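When outbound connectivity flaps like that, it helps to have a quick, scripted way to tell "our range is broken" apart from "the upstream network is broken." The sketch below is not what we actually ran; it's a minimal illustration that assumes a TCP connection to port 53 on the same public resolvers (8.8.8.8 and 1.1.1.1) is a reasonable stand-in for ping, since ICMP from Python needs raw sockets and usually elevated privileges. The function names and the 2-second timeout are hypothetical.

```python
import socket
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical probe targets: public DNS resolvers checked on TCP port 53.
# TCP is used instead of ICMP ping, which needs raw sockets (and usually
# elevated privileges) from Python.
DEFAULT_TARGETS: Tuple[Tuple[str, int], ...] = (("8.8.8.8", 53), ("1.1.1.1", 53))


def probe(host: str, port: int, timeout: float = 2.0,
          connect: Callable = socket.create_connection) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        conn = connect((host, port), timeout=timeout)
    except OSError:  # covers refused, unreachable, and timed-out connections
        return False
    conn.close()
    return True


def check_outbound(targets: Iterable[Tuple[str, int]] = DEFAULT_TARGETS,
                   timeout: float = 2.0,
                   connect: Callable = socket.create_connection) -> Dict[str, bool]:
    """Probe each target and map "host:port" to whether it was reachable."""
    return {f"{host}:{port}": probe(host, port, timeout, connect)
            for host, port in targets}
```

Running `check_outbound()` on a loop from a box inside the range gives a cheap timeline: results that flip between True and False point at intermittent upstream trouble rather than a dead local service. The `connect` parameter exists so the logic can be exercised without touching the network.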

Once we DID figure out that it was a DEF CON and/or BTV network issue, we reworked a few things and got ourselves sorted out. Everything was fine until we hit full capacity. More on that in a minute.

What stayed the same...

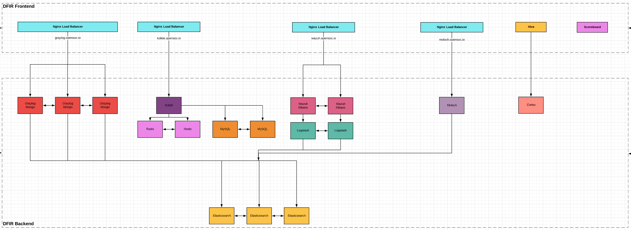

Was a large part of our DFIR systems.

GRAYLOG

We still had a Graylog cluster backed by an Elasticsearch cluster.

It was ginormous and hardly using half of its resources, which is why we were so surprised when we got to DEF CON, after a completely smooth Black Hat experience, and things started to hiccup.

Turns out, even the Graylog team was surprised. We had more than double the participants this time around (a good problem to have!), which meant hundreds more people threat hunting on our Graylog systems, and Java was struggling to keep up.

Thanks to the amazing nerds on the Graylog team, all our problems were quickly solved.

Turns out, there's a little setting called http_thread_pool_size that made all the difference. Our thread pools were being exhausted with so many people searching on the frontend, and Graylog couldn't even talk to its own nodes internally.

A few tweaks and a rolling restart later, we were back in business!
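For reference, http_thread_pool_size lives in each Graylog node's server.conf, so a change has to land on every node before the rolling restart. Here's a minimal sketch of a helper that sets it in a config file's text, assuming the usual `key = value` format where defaults may appear as commented-out lines. The helper name is hypothetical, and the value 64 used below is an illustrative placeholder, not the number we used; size it to your own search concurrency.

```python
import re


def set_conf_value(conf_text: str, key: str, value: str) -> str:
    """Set `key = value` in Graylog-style server.conf text and return the new text.

    Prefers an active `key = ...` line, then a commented-out `#key = ...` line;
    if neither exists, the setting is appended at the end.
    """
    line = f"{key} = {value}"
    escaped = re.escape(key)
    # [ \t]* instead of \s* so a match never spans multiple lines.
    active = re.compile(rf"^[ \t]*{escaped}[ \t]*=.*$", re.MULTILINE)
    commented = re.compile(rf"^[ \t]*#[ \t]*{escaped}[ \t]*=.*$", re.MULTILINE)
    for pattern in (active, commented):
        if pattern.search(conf_text):
            # Replace only the first match; a lambda avoids backslash escapes in `line`.
            return pattern.sub(lambda _match: line, conf_text, count=1)
    separator = "" if not conf_text or conf_text.endswith("\n") else "\n"
    return f"{conf_text}{separator}{line}\n"
```

For example, `set_conf_value(text, "http_thread_pool_size", "64")` turns a commented-out `#http_thread_pool_size = ...` line into an active setting. Restarting nodes one at a time afterward keeps the cluster serving searches while the change rolls out.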

Link - https://www.graylog.org

KOLIDE

We still had Kolide backed by Redis and MySQL. We are huge fans of Kolide, and that UI is <3

Kolide in and of itself didn't have issues. What did have issues... was osquery, and we're still looking into a better fix for that.

Half a dozen users would query a single Windows system, and the osquery agent on it would come to a halt, which meant no one could hunt on it.

We crafted a fix to clean and restart those agents on a schedule, and that worked for the duration of the event(s). This is by no means a long-term fix, but it worked well enough in this case.
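Our actual fix isn't shown here, but a scheduled "clean and restart" can be sketched roughly like this. It assumes the agent runs as a Windows service named osqueryd, that "clean" means force-killing any osqueryd.exe processes still hanging around after the service stops, and that the script runs with admin rights; all names and timings are hypothetical. The command runner is injectable so the sequence can be dry-run before it touches a live host.

```python
import subprocess
import time
from typing import Callable, Optional, Sequence

# Hypothetical names; adjust to match your deployment.
SERVICE_NAME = "osqueryd"        # assumed Windows service name for the osquery agent
PROCESS_IMAGE = "osqueryd.exe"   # process image name passed to taskkill

Runner = Callable[[Sequence[str], bool], None]


def run(cmd: Sequence[str], check: bool = True) -> None:
    """Run a command; raise CalledProcessError on non-zero exit if `check` is True."""
    subprocess.run(list(cmd), check=check)


def clean_restart(service: str = SERVICE_NAME, image: str = PROCESS_IMAGE,
                  runner: Runner = run, settle_seconds: float = 5.0) -> None:
    """Stop the agent service, kill leftover agent processes, then start it again."""
    # `sc stop` only requests a stop and exits non-zero if the service is
    # already stopped, so its exit code is not treated as fatal.
    runner(["sc", "stop", service], False)
    time.sleep(settle_seconds)  # give the service time to shut down
    # Force-kill anything still running; taskkill exits non-zero if nothing matched.
    runner(["taskkill", "/F", "/IM", image], False)
    runner(["sc", "start", service], True)


def run_on_schedule(interval_seconds: float, iterations: Optional[int] = None,
                    **kwargs) -> None:
    """Call clean_restart every `interval_seconds`; loop forever if iterations is None."""
    done = 0
    while iterations is None or done < iterations:
        clean_restart(**kwargs)
        done += 1
        if iterations is None or done < iterations:
            time.sleep(interval_seconds)
```

Like ours, this treats the symptom rather than the cause: it keeps agents responsive between restarts but does nothing about why concurrent queries stall them.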

Link - https://kolide.com/

MOLOCH

We still had full packet capture in the range; Moloch is a huge piece of OpenSOC. This year we integrated Suricata, which allowed us to capture so many more artifacts in each scenario.

Traffic analysis, IDS alerts/signatures, PCAPs, rendering sekrit images in the Moloch UI, built-in CyberChef... so much to be gained from this tool. We are huge fans.
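Suricata writes its alerts as EVE JSON, one JSON object per line, and that log is the usual handoff point when pairing it with other tools like Moloch. As a small illustration (not part of our pipeline), the sketch below tallies the most common alert signatures in an EVE log, which is a quick way to sanity-check what a scenario actually fires. It assumes the standard `event_type` and `alert.signature` fields; the function name is hypothetical.

```python
import json
from collections import Counter
from typing import Iterable, List, Tuple


def top_alerts(eve_lines: Iterable[str], n: int = 10) -> List[Tuple[str, int]]:
    """Return the n most common alert signatures in Suricata EVE JSON lines."""
    counts: Counter = Counter()
    for raw in eve_lines:
        raw = raw.strip()
        if not raw:
            continue
        try:
            event = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip partial lines, e.g. from a log that is still being written
        if isinstance(event, dict) and event.get("event_type") == "alert":
            signature = event.get("alert", {}).get("signature", "<no signature>")
            counts[signature] += 1
    return counts.most_common(n)
```

Pass it an open file handle for your eve.json (often under /var/log/suricata/, but the location varies by install) and it returns `(signature, count)` pairs, most frequent first.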

Link - https://molo.ch/

What was different...

We switched some things up this year.

THE SCOREBOARD

It seems like this has been the bane of our existence a few times now.

As Cake (@ILiedAboutCake) tweeted on August 11, 2019:

> Is there a stat for how many people asked shortstack if the scoreboard was down on Slack?

But. Of all the scoreboards we tried in the past (many), this one was ultimately the most successful in that it met all of our needs (regarding scenarios, hints, points, deductions, user administration, backups, categories, the list goes on). It just crumbled under heavy load.

We're planning on coming prepared with a custom solution next year.
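That custom solution doesn't exist yet, so this is only a hypothetical sketch of the core bookkeeping a scoreboard needs for the features listed above: points per challenge, hint costs, manual deductions, and a ranked leaderboard. Every class, field, and value here is illustrative, and it deliberately ignores the hard part we actually hit (staying up under heavy concurrent load).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Challenge:
    name: str
    category: str
    points: int
    hint_cost: int = 0  # points deducted if the player unlocks this challenge's hint


@dataclass
class Player:
    solved: Set[str] = field(default_factory=set)  # names of solved challenges
    hints: Set[str] = field(default_factory=set)   # challenges whose hint was unlocked
    penalties: int = 0                             # manual deductions by an admin


def score(player: Player, challenges: Dict[str, Challenge]) -> int:
    """Points for solves, minus hint costs, minus manual deductions."""
    earned = sum(challenges[name].points for name in player.solved)
    hint_costs = sum(challenges[name].hint_cost for name in player.hints)
    return earned - hint_costs - player.penalties


def leaderboard(players: Dict[str, Player],
                challenges: Dict[str, Challenge]) -> List[Tuple[str, int]]:
    """Rank players by score (highest first), breaking ties by name."""
    scores = [(name, score(p, challenges)) for name, p in players.items()]
    return sorted(scores, key=lambda item: (-item[1], item[0]))
```

Breaking ties by name is an arbitrary choice for the sketch; many CTF-style scoreboards break ties by time of last solve instead.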

SCENARIOS

If you've played OpenSOC before, you may have noticed a few similarities. But we brought about twice the material to DEF CON this year, with at least triple the artifacts to uncover.

More material means more for you guys to sink your teeth into, more tools and tactics to learn.

Our team worked unbelievably hard to craft true-to-life stories backing real scenarios and depicting real APTs. The behind-the-scenes red teaming effort that went into this year deserves a blog post all its own.

WAZUH

We didn't use Wazuh/OSSEC this year. We don't get a lot of unique artifacts out of it for the amount of effort and resources it takes to have it in the range, so we let that one go this time around.

It's a great tool to have in your environment (especially for compliance purposes, lots of wins there), but it doesn't lend much to threat hunting that we can't already get out of the above tools.

Link - https://wazuh.com/

Black Badge

As the saying goes, hard work pays off. We were officially made a black badge contest this year. Our first year as an official DEF CON contest, and we did it!

We were as surprised as the rest of you, as the finalists found out only minutes after we did :)

I mean, Dark Tangent did tell us to bring it...

Thank you

There are so many moving parts in OpenSOC, and we wouldn't have been able to accomplish any of this without everyone on this team. It truly is a group effort, and a labor of love.

To everyone who participated, asked to participate, showed us <3 at events, or shared feedback, constructive criticism, or fresh ideas: we thank you.

We do this for you guys, and we hope you enjoy it as much as we do.

We can't wait to see you at an OpenSOC event in the future! And if you're interested in further training, please check out our other offering, Recon NDR.